Speech-to-Phrase brings voice home - Voice chapter 9

Welcome to Voice chapter 9 🎉 part of our long-running series following the development of open voice.

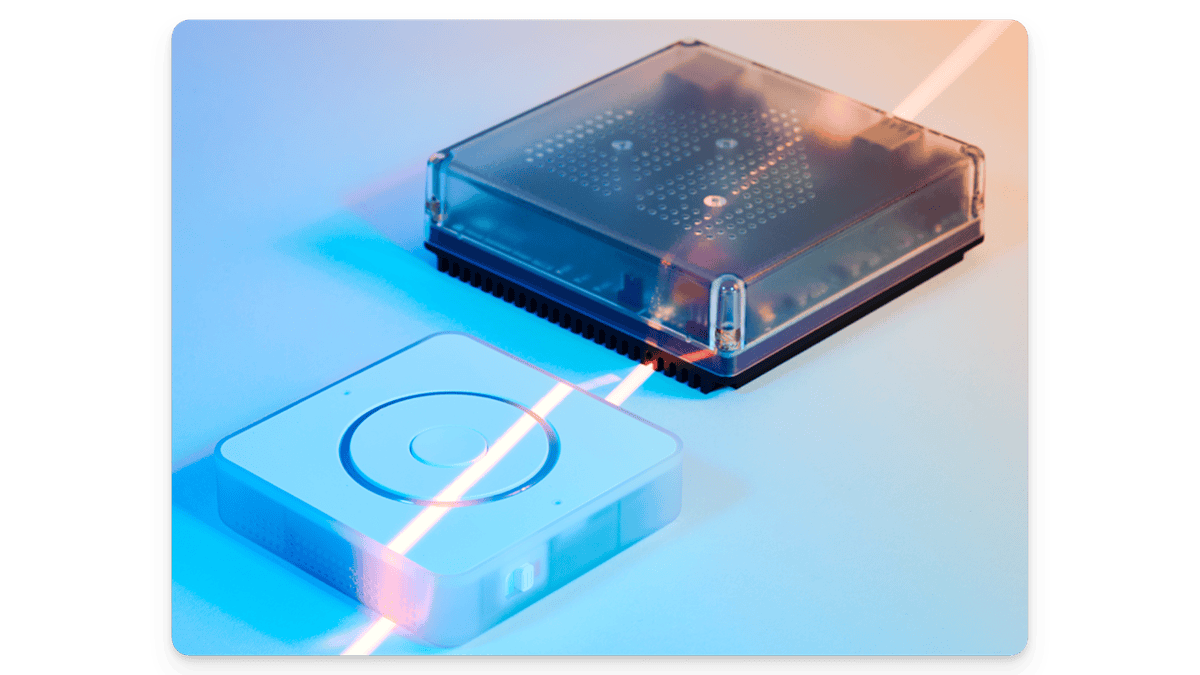

We’re still pumped from the launch of the Home Assistant Voice Preview Edition at the end of December. It sold out 23 minutes into our announcement - wow! We’ve been working hard to keep it in stock at all our distributors.

Today, we have a lot of cool stuff to improve your experience with Voice PE or any other Assist satellite you’re using. This includes fully local and offline voice control that can be powered by nearly any Home Assistant system.

- Voice for the masses

- Building an Open Voice Ecosystem

- Large language model improvements

- Expanding Voice Capabilities

- Home Assistant phones home: analog phones are back!

- Wyoming improvements

- 🫵 Help us bring choice to voice!

Dragon NaturallySpeaking was a popular speech recognition program introduced in 1997. To run this software you needed at least a 133 MHz Pentium processor, 32 MB of RAM, and Windows 95 or later. Nearly thirty years later, Speech-to-Text is much better, but needs orders of magnitude more resources.

Incredible technologies are being developed in speech processing, but it’s currently unrealistic for a device that costs less than $100 to take real advantage of them. It’s possible, of course, but running the previously recommended Speech-to-Text tool, Whisper

What’s more, advancing the development of Whisper is largely in the hands of OpenAI, as we don’t have the resources required to add languages to that tool. We could add every possible language to Home Assistant, but if any single part of our voice pipeline lacks language support, it renders voice unusable for that language. As a result, many widely spoken languages were unsupported for local voice control.

This left many users unable to use voice to control their smart home without purchasing extra hardware or services. We’re changing this today with the launch of a key new piece of our voice pipeline.

Voice for the masses

Speech-to-Phrase

The result: speech transcribed in under a second on a Home Assistant Green or Raspberry Pi 4. The Raspberry Pi 5 processes commands seven times faster, clocking in at 150 milliseconds per command!

With great speed comes some limitations. Speech-to-Phrase only supports a subset of Assist’s voice commands, and more open-ended things like shopping lists, naming a timer, and broadcasts are not usable out of the box. Really any commands that can accept random words (wildcards) will not work. For the same reasons, Speech-to-Phrase is intended for home control only and not LLMs.

The most important home control commands are supported, including turning lights on and off, changing brightness and color, getting the weather, setting timers, and controlling media players. Custom sentences can also be added to trigger things not covered by the current commands, and we expect the community will come up with some clever new ways to use this tech.

All you need to get started with voice

Speech-to-Phrase is launching with support for English, French, German, Dutch, Spanish, and Italian - covering nearly 70% of Home Assistant users. Nice. Unlike the local Speech-to-Text tools currently available, adding languages to Speech-to-Phrase is much easier. This means many more languages will be available in future releases, and we would love your help adding them!

We’re working on updating the Voice wizard to include Speech-to-Phrase. Until then, you need to install the add-on manually:

Building an Open Voice Ecosystem

When we launched Home Assistant Voice Preview Edition, we didn’t just launch a product; we kickstarted an ecosystem. We did this by open-sourcing all parts and ensuring that the voice experience built into Home Assistant is not tied to a single product. Any voice assistant built for the Open Home ecosystem can take advantage of all this work. Even your DIY ones!

With ESPHome 2025.2, which we’re releasing next week, any ESPHome-based voice assistant will support making broadcasts (more on that below), and they will also be able to use our new voice wizard to ensure new users have everything they need to get started.

This will include updates for the $13 Atom Echo and ESP32-S3-Box-3 devices that we used for development during the Year of the Voice!

Large language model improvements

We aim for Home Assistant to be the place for experimentation with AI in the smart home. We support a wide range of models, both local and cloud-based, and are constantly improving the different ways people can interact with them. We’re always running benchmarks

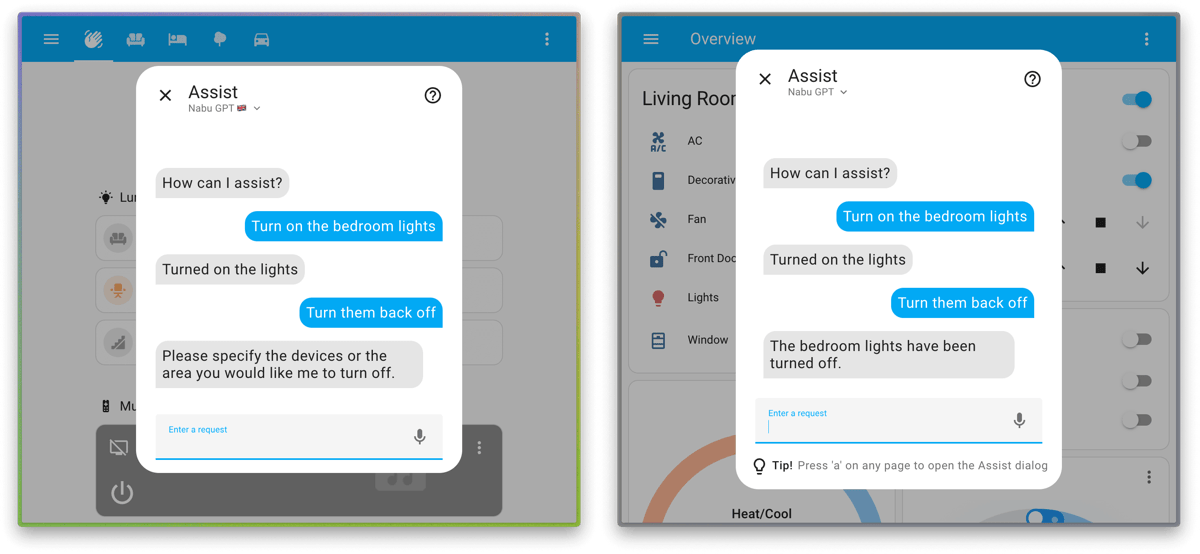

If you set up Assist, Home Assistant’s built-in voice assistant, and configure it to use an LLM, you might have noticed some new features landing recently. One major change was the new “prefer handling commands locally” setting, which always attempts to run commands with the built-in conversation agent before it sends it off to an LLM. We noticed many easy-to-run commands were being sent to an LLM, which can slow down things and waste tokens. If Home Assistant understands the command (e.g., turn on the lights), it will perform the necessary action, and only passes it on to your chosen LLM if it doesn’t understand the command (e.g., what’s the air quality like now).

Adding the above features made us realize that LLMs need to understand the commands handled locally. Now, the conversation history is shared with the LLM. The context allows you to ask the LLM for follow-up questions that refer to recent commands, regardless of whether they helped process the request.

Left: without shared conversations. Right: Shared conversations enable GPT to understand context.

Reducing the time to first word with streaming

When experimenting with larger models, or on slower hardware, LLM’s can feel sluggish. They only respond once the entire reply is generated, which can take frustratingly long for lengthy responses (you’ll be waiting a while if you ask it to tell you an epic fairy tale).

In Home Assistant 2025.3 we’re introducing support for LLMs to stream their response to the chat, allowing users to start reading while the response is being generated. A bonus side effect is that commands are now also faster: they will be executed as soon as they come in, without waiting for the rest of the message to be complete.

Streaming is coming initially for Ollama and OpenAI.

Model Context Protocol brings Home Assistant to every AI

In November 2024, Anthropic announced the Model Context Protocol

Using the new Model Context Protocol integration, Home Assistant can integrate external MCP servers and make their tools available to LLMs that Home Assistant talks to (for your voice assistant or in automations). There is quite a collection of MCP servers

With the new Model Context Protocol server integration, Home Assistant’s LLM tools can be included in other AI apps, like the Claude desktop app (tutorial

Thanks Allen!

Expanding Voice Capabilities

We keep enhancing the capabilities of the built-in conversation agent of Home Assistant. With the latest release, we’re unlocking two new features:

“Broadcast that it’s time for dinner”

The new broadcast feature lets you quickly send messages to the other Assist satellites in your home. This makes it possible to announce it’s time for dinner, or announce battles between your children 😅.

“Set the temperature to 19 degrees”

Previously Assist could only tell you the temperature, but now it can help you change the temperature of your HVAC system. Perfect for changing the temperature while staying cozy under a warm blanket.

Home Assistant phones home: analog phones are back!

Two years ago, we introduced the world’s most private voice assistant: an analog phone! Users can pick it up to talk to their smart home, and only the user can hear the response. A fun feature we’re adding today is that Home Assistant can now call your analog phone!

Analog phones are great when you want to notify a room, instead of an entire home. For instance, when the laundry is done, you can notify someone in the living room, but not the office. Also since the user needs to pick up the horn to receive the call, you will know if your notification was received.

If you’re using an LLM as your voice assistant, you can also start a conversation from a phone call. You can provide the opening sentence and via a new “extra system prompt” option, provide extra context to the LLM to interpret the response from the user. For example,

- Extra system context: garage door cover.garage_door was left open for 30 minutes. We asked the user if it should be closed

- Assistant: should the garage door be closed?

- User: sure

Thanks JaminH

Wyoming improvements

Wyoming is our standard for linking together all the different parts needed to build a voice assistant. Home Assistant 2025.3 will add support for announcements to Wyoming satellites, making them eligible for the new broadcast feature too.

We’re also adding a new microWakeWord add-on (the same wake word engine running on Voice PE!) that can be used as an alternative to openWakeWord. As we collect more real-world samples from our Wake Word Collective

🫵 Help us bring choice to voice!

We’ve said it before, and we’ll say it again—the era of open voice has begun, and the more people who join us, the better it gets. Home Assistant offers many ways to start with voice control, whether by building your own Assist hardware or getting a Home Assistant Voice Preview Edition. With every update, you’ll see new features, and you’ll get to preview the future of voice today.

A huge thanks to all the language leaders and contributors helping to shape open voice in the home! There are many ways to get involved, from translating or sharing voice samples to building new features—learn more about how you can contribute here. Another great way to support development is by subscribing to Home Assistant Cloud, which helps fund the Open Home projects that power voice.